More about APIs and Docker

Lecture 21

Cornell University

INFO 4940/5940 - Fall 2024

November 19, 2024

Announcements

- Project draft

Learning objectives

- Introduce a container model for deploying models as APIs

- Define key terms for working with containers and Docker

- Implement Docker containers for model APIs

- Authenticate with cloud storage platforms for model versioning

- Expand {vetiver} model APIs through additional metadata and endpoints

Where does {vetiver} work?

- Posit’s pro products, like Connect

- AWS SageMaker (R only, for now)

- A public or private cloud, using Docker

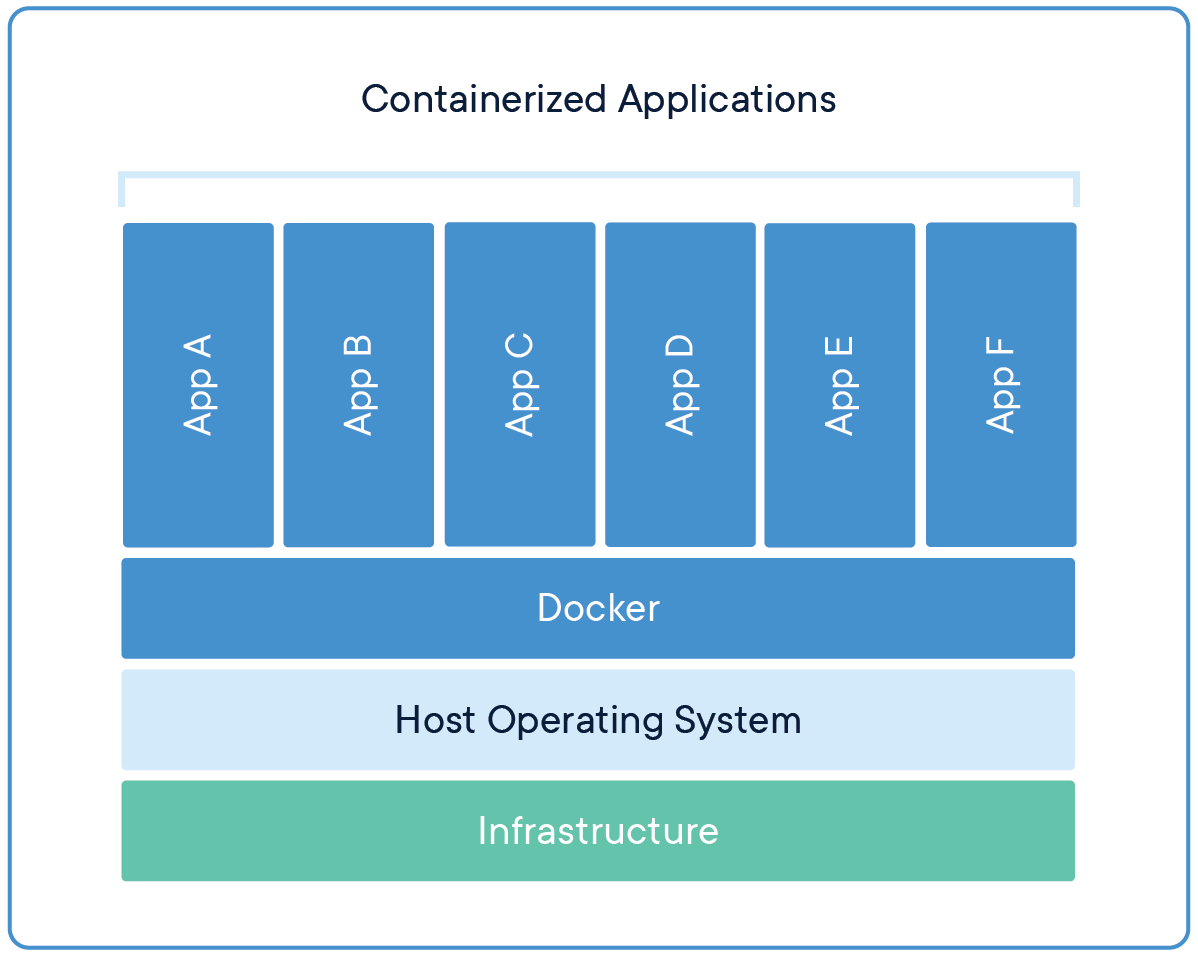

Docker

Containerized environments for your code

Virtual machine

Image credit: What is a container?

Container

Image credit: What is a container?

Benefits to containers

- Lightweight

- Portable

- Consistent

- Scalable

- Secure

Why Docker?

- Open source

- Reproducible

- Bring your own container philosophy

Create Docker artifacts

Start with a trained and versioned model

- Dockerfile

- Model dependencies, typically renv.lock

- File to serve API, typically plumber.R
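One way to generate all three artifacts at once is vetiver_prepare_docker(). The sketch below is a minimal wrapper, assuming the model was already written with vetiver_pin_write() to a local board (board_local()) under the name "tompkins-housing", the pin name used in the plumber.R files later in these slides. The helper name prepare_housing_docker() is hypothetical, and docker_args = list(port = 8080) is an assumption made to match the EXPOSE 8080 line in the Dockerfile below.

```r
# Hypothetical helper: write plumber.R, Dockerfile, and vetiver_renv.lock
# for a model that was already versioned with vetiver::vetiver_pin_write().
prepare_housing_docker <- function(board = pins::board_local(),
                                   name = "tompkins-housing",
                                   path = ".") {
  vetiver::vetiver_prepare_docker(
    board,
    name,
    path = path,
    # Assumed: serve on port 8080 to match EXPOSE 8080 in the Dockerfile
    docker_args = list(port = 8080)
  )
}
```

Calling prepare_housing_docker() from the project folder should leave plumber.R, the Dockerfile, and vetiver_renv.lock side by side, ready for docker build.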

Dockerfiles for {vetiver}

# Generated by the vetiver package; edit with care

FROM rocker/r-ver:4.4.0
ENV RENV_CONFIG_REPOS_OVERRIDE https://packagemanager.rstudio.com/cran/latest

RUN apt-get update -qq && apt-get install -y --no-install-recommends \
  libcurl4-openssl-dev \
  libicu-dev \
  libsodium-dev \
  libssl-dev \
  make \
  zlib1g-dev \
  && apt-get clean

COPY vetiver_renv.lock renv.lock
RUN Rscript -e "install.packages('renv')"
RUN Rscript -e "renv::restore()"
COPY plumber.R /opt/ml/plumber.R
EXPOSE 8080
ENTRYPOINT ["R", "-e", "pr <- plumber::plumb('/opt/ml/plumber.R'); pr$run(host = '0.0.0.0', port = 8080)"]

Build your container

If you have an Apple Silicon Mac

Add --platform linux/amd64 to your docker build command so that R packages are installed from precompiled binaries rather than compiled from source.
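The build command itself is docker build -t housing . (the tag housing is a hypothetical name), with --platform linux/amd64 added on Apple Silicon. To keep every example in R, the sketch below assembles those arguments for system2(); the helper docker_build_args() is hypothetical, and detecting Apple Silicon by Sys.info() reporting "Darwin" on an "arm64" machine is one assumed way to automate the flag.

```r
# Hypothetical helper: assemble `docker build` arguments, adding
# --platform linux/amd64 automatically on Apple Silicon (arm64) Macs.
docker_build_args <- function(tag, context = ".", platform = NULL) {
  info <- Sys.info()
  if (is.null(platform) &&
      info[["sysname"]] == "Darwin" &&
      info[["machine"]] == "arm64") {
    platform <- "linux/amd64"
  }
  c(
    "build",
    # `if` without `else` yields NULL, which c() drops
    if (!is.null(platform)) c("--platform", platform),
    "-t", tag,
    context
  )
}

# Usage (requires Docker), from the folder that holds the Dockerfile:
# system2("docker", docker_build_args("housing"))
```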

Run your container
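Running the image publishes the container's port 8080 (from EXPOSE 8080) on the host, the equivalent of docker run --rm -p 8080:8080 housing in a terminal. The hypothetical helper below builds those arguments for system2(); --rm removes the container when it stops, and using host port 8080 is an assumption you can change if that port is already taken.

```r
# Hypothetical helper: arguments for `docker run`, mapping a host port
# to the port the API listens on inside the container.
docker_run_args <- function(tag, host_port = 8080, container_port = 8080) {
  c(
    "run", "--rm",
    "-p", paste0(host_port, ":", container_port),
    tag
  )
}

# Usage (requires Docker and an image tagged "housing"):
# system2("docker", docker_run_args("housing"), wait = FALSE)
```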

Make predictions
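With the container running, {vetiver} can treat the API as a model endpoint: vetiver_endpoint() wraps the /predict URL, and predict() posts new data to it. This is a minimal sketch, assuming the container is reachable at http://127.0.0.1:8080; the helper name predict_from_container() is hypothetical.

```r
# Hypothetical helper: send new rows to the containerized API's /predict endpoint.
predict_from_container <- function(new_data,
                                   url = "http://127.0.0.1:8080/predict") {
  endpoint <- vetiver::vetiver_endpoint(url)
  # predict() dispatches to vetiver's method for endpoint objects
  predict(endpoint, new_data)
}
```

For example, predict_from_container(housing_test) should return a .pred column. Because the model was trained on log10(price), 10^.pred converts the predictions back to dollars.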

Application exercise

ae-20

- Go to the course GitHub org and find your ae-20 repo (repo name will be suffixed with your GitHub name).

- Clone the repo in RStudio, run renv::restore() to install the required packages, open the Quarto document in the repo, and follow along and complete the exercises.

- Render, commit, and push your edits by the AE deadline: end of the day.

⏱️ Your turn

Activity

Create a Docker container for your model using a local board, board_local().

Build the Docker container and run it locally. Make predictions using the API.

07:00

{pins} 📌

The {pins} package publishes data, models, and other R objects, making it easy to share them across projects and with your colleagues.

You can pin objects to a variety of pin boards, including:

- a local folder (like a network drive or even a temporary directory)

- Amazon S3

- Azure Storage

- Google Cloud
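Whichever board you choose, the pins workflow is the same: write an object to the board under a name, then read it back by that name. The sketch below uses board_temp(), a temporary local folder, with the built-in mtcars data as a stand-in object; swapping board_temp() for board_s3(), board_azure(), or board_gcs() changes where the pin is stored but not the pin_write() and pin_read() calls.

```r
library(pins)

# A temporary folder board stands in for a network drive or cloud board
board <- board_temp()

# Pin a built-in data frame as an .rds file, then read it back by name
pin_write(board, mtcars, name = "mtcars", type = "rds")
cars_again <- pin_read(board, "mtcars")

# List the pins stored on this board
pin_list(board)
```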

Use board_gcs() to connect to Google Cloud Storage

Authenticating with Google Cloud Storage

service-auth.json
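A minimal sketch of authenticating and connecting, assuming service-auth.json is a Google Cloud service account key in the working directory. The bucket "info-4940-models" and prefix "bcs88/" are taken from the plumber.R files later in these slides (substitute your own prefix), and the helper connect_gcs_board() is hypothetical. As an alternative, {googleCloudStorageR} can authenticate automatically when it is loaded if the GCS_AUTH_FILE environment variable points to the key file.

```r
# Hypothetical helper: authenticate with a service account key, then
# connect a pins board to a folder (prefix) inside a GCS bucket.
connect_gcs_board <- function(json_file = "service-auth.json",
                              bucket = "info-4940-models",
                              prefix = "bcs88/") {
  # Authenticate googleCloudStorageR with the service account JSON key
  googleCloudStorageR::gcs_auth(json_file = json_file)
  # pins::board_gcs() uses googleCloudStorageR for storage access
  pins::board_gcs(bucket, prefix = prefix)
}

# Usage: b <- connect_gcs_board()
```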

⏱️ Your turn

Activity

Create a Docker container for your model using a Google Cloud Storage board, board_gcs().

Build the Docker container and run it locally. Make predictions using the API.

07:00

Building {vetiver} Docker artifacts

vetiver_prepare_docker() decomposes into two major functions:

- vetiver_write_plumber() to create a Plumber file

- vetiver_write_docker() to create a Dockerfile and {renv} lockfile

The generated plumber.R and Dockerfile may need additional manual edits before the container works.
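Running the two steps separately leaves room to edit plumber.R before the Dockerfile and lockfile are written. The sketch below assumes a GCS board object and the pin name "tompkins-housing"; the helper name is hypothetical, port = 8080 mirrors the Dockerfile shown earlier, and passing additional_pkgs = "googleCloudStorageR" is a precaution so the board's client package is installed in the image. The container also needs the service account key at runtime; one assumed approach is to mount the key file into the container with docker run and point GCS_AUTH_FILE at it.

```r
# Hypothetical helper: the two steps behind vetiver_prepare_docker(),
# run separately so the generated files can be edited in between.
write_gcs_docker_artifacts <- function(board, name = "tompkins-housing",
                                       path = ".") {
  # Step 1: write plumber.R, which reads the pinned model from the board
  plumber_file <- file.path(path, "plumber.R")
  vetiver::vetiver_write_plumber(board, name, file = plumber_file)

  # (Edit plumber.R here if needed, e.g. to add endpoints.)

  # Step 2: write the Dockerfile and vetiver_renv.lock for the model
  v <- vetiver::vetiver_pin_read(board, name)
  vetiver::vetiver_write_docker(
    v,
    plumber_file = plumber_file,
    path = path,
    port = 8080,
    # Assumed precaution: ensure the GCS client package is in the lockfile
    additional_pkgs = "googleCloudStorageR"
  )
}
```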

⏱️ Your turn

Activity

Create a Docker container for your model using a Google Cloud Storage board, board_gcs().

Ensure the Docker container is correctly configured to use {googleCloudStorageR}.

Build the Docker container and run it locally. Make predictions using the API.

07:00

Docker resources

Model metrics as metadata 🎯

Model metrics as metadata

library(tidyverse)
library(tidymodels)

# Read the Tompkins home sales data
housing <- read_csv("data/tompkins-home-sales-geocoded.csv")

# Model price on the log10 scale and hold out 20% of rows for testing
set.seed(123)
housing_split <- housing |>
  mutate(price = log10(price)) |>
  initial_split(prop = 0.8)
housing_train <- training(housing_split)
housing_test <- testing(housing_split)

# Impute missing predictors: means for numeric, modes for nominal
rf_rec <- recipe(price ~ beds + baths + area + year_built + town, data = housing_train) |>
  step_impute_mean(all_numeric_predictors()) |>
  step_impute_mode(all_nominal_predictors())

# Random forest regression with 200 trees (ranger engine by default)
housing_fit <- workflow() |>
  add_recipe(rf_rec) |>
  add_model(rand_forest(trees = 200, mode = "regression")) |>
  fit(data = housing_train)

Model metrics as metadata

- We pin our {vetiver} model to a board to version it

- The metadata, including our metrics, are versioned along with the model
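A minimal sketch of those steps, assuming the housing_fit and housing_test objects from the training code above and a board such as board_local(). The choice of RMSE, R-squared, and MAE from {yardstick} and the helper name pin_housing_with_metrics() are assumptions; the metrics are computed on the log10 price scale the model was trained on.

```r
# Hypothetical helper: compute test-set metrics, store them as model
# metadata, and write a new version of the pin.
pin_housing_with_metrics <- function(fit, test_data, board,
                                     name = "tompkins-housing") {
  # Regression metrics; truth is the log10-transformed price column
  housing_metrics <- yardstick::metric_set(
    yardstick::rmse, yardstick::rsq, yardstick::mae
  )
  metrics <- generics::augment(fit, new_data = test_data) |>
    housing_metrics(truth = price, estimate = .pred)

  # Metadata is versioned together with the model when the pin is written
  v <- vetiver::vetiver_model(fit, name, metadata = list(metrics = metrics))
  vetiver::vetiver_pin_write(board, v)
  invisible(v)
}

# Usage: pin_housing_with_metrics(housing_fit, housing_test, board_local())
```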

⏱️ Your turn

Activity

Compute metrics for your model using the testing data.

Store these metrics as metadata in a {vetiver} model object.

Write this new {vetiver} model object as a new version of your pin.

05:00

Model metrics as metadata

How do we extract our metrics out to use them?
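For a model pinned with {vetiver}, the metrics should be retrievable with pins::pin_meta(board, "tompkins-housing")$user$metrics. The runnable sketch below shows the same round trip with plain pins, using a base R lm() on mtcars as a stand-in for the housing model (an assumption for illustration): user metadata is stored under $user, and a data frame comes back as a list of columns because pins saves metadata as YAML.

```r
library(pins)

# Stand-in model: a linear regression on the built-in mtcars data
fit <- lm(mpg ~ wt, data = mtcars)

# Two in-sample error metrics computed from the model's residuals
metrics <- data.frame(
  .metric = c("rmse", "mae"),
  .estimate = c(sqrt(mean(residuals(fit)^2)), mean(abs(residuals(fit))))
)

# Store the metrics as user metadata alongside the pinned model
board <- board_temp(versioned = TRUE)
pin_write(board, fit, name = "cars-lm", type = "rds",
          metadata = list(metrics = metrics))

# User metadata comes back under $user as a list of columns,
# so convert it back to a data frame
extracted <- as.data.frame(pin_meta(board, "cars-lm")$user$metrics)
extracted
```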

⏱️ Your turn

Activity

Obtain the metrics metadata for your versioned model.

What else might you want to store as model metadata?

How or when might you use model metadata?

07:00

Add a new endpoint to your API ✨

Add a new endpoint to your API

- A lot of code is being generated throughout this deployment process

- You have access to that code and can alter it yourself!

- The {vetiver} framework has sensible defaults but is extensible for more complex use cases

- What really sets up your model API?

Add a new endpoint to your API

# Generated by the vetiver package; edit with care

library(pins)
library(plumber)
library(rapidoc)
library(vetiver)
library(googleCloudStorageR)

# Packages needed to generate model predictions
if (FALSE) {
  library(parsnip)
  library(ranger)
  library(recipes)
  library(workflows)
}
b <- board_gcs("info-4940-models", prefix = "bcs88/")
v <- vetiver_pin_read(b, "tompkins-housing", version = "20241118T160824Z-48c64")

#* @plumber
function(pr) {
  pr %>% vetiver_api(v)
}

Add a new endpoint to your API

- You add more endpoints in your Plumber app file, plumber.R

- It is ultimately up to you to decide what your API’s endpoints should be!
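For example, the metrics stored as model metadata could be exposed through an extra GET endpoint. In this sketch the path /metrics and the helper add_metrics_endpoint() are hypothetical; it assumes v is the model read with vetiver_pin_read(), whose user-supplied metadata sits in v$metadata$user, and that the helper is defined in plumber.R itself. plumber::pr_get() returns the router, so the helper can be chained after vetiver_api().

```r
# Hypothetical helper: add GET /metrics to an existing plumber router,
# returning the metrics stored in the vetiver model's user metadata.
add_metrics_endpoint <- function(pr, v) {
  plumber::pr_get(
    pr,
    path = "/metrics",
    handler = function() v$metadata$user$metrics
  )
}

# Assumed usage inside the `#* @plumber` function of plumber.R:
# function(pr) {
#   pr |>
#     vetiver_api(v) |>
#     add_metrics_endpoint(v)
# }
```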

Add a new endpoint to your API

# Generated by the vetiver package; edit with care

library(pins)
library(plumber)
library(rapidoc)
library(vetiver)
library(googleCloudStorageR)
library(DALEXtra)
library(dplyr)

# Packages needed to generate model predictions
if (FALSE) {
  library(parsnip)
  library(ranger)
  library(recipes)
  library(workflows)
}
b <- board_gcs("info-4940-models", prefix = "bcs88/")
v <- vetiver_pin_read(b, "tompkins-housing", version = "20241118T160824Z-48c64")

# Pinned DALEX explainer used to compute SHAP values
explainer <- pin_read(b, "tompkins-housing-shap")

# Handler for POST /explain: convert the JSON body to the model's
# prototype column types, compute SHAP values over B = 25 sampled
# orderings, and average each variable's contribution
handler_explain <- function(req) {
  new_data <- req$body
  new_data <- vetiver_type_convert(new_data, v$prototype)
  shap <- predict_parts(explainer, new_data, type = "shap", B = 25)
  shap |>
    group_by(variable) |>
    summarize(contribution = mean(contribution))
}

#* @plumber
function(pr) {
  pr |>
    vetiver_api(v) |>
    pr_post(path = "/explain", handler = handler_explain)
}

⏱️ Your turn

Activity

Add a new endpoint to the API app file you already made.

Run the app locally and check out your new endpoint.

How might you want to use an additional endpoint?

07:00
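To check the new /explain endpoint from R, you can POST one or more rows of predictors to it. This sketch assumes the {httr2} package and an API running at http://127.0.0.1:8080; the helper explain_from_api() is hypothetical. req_body_json() sends a data frame as a JSON array of rows, which plumber parses back into a data frame available as req$body.

```r
# Hypothetical helper: POST rows to the custom /explain endpoint and
# return the averaged SHAP contributions as a data frame.
explain_from_api <- function(new_data,
                             url = "http://127.0.0.1:8080/explain") {
  httr2::request(url) |>
    httr2::req_body_json(new_data) |>
    httr2::req_perform() |>
    httr2::resp_body_json(simplifyVector = TRUE)
}
```

For example, explain_from_api(head(housing_test[, c("beds", "baths", "area", "year_built", "town")], 1)) sends only the predictor columns from the recipe and should return one averaged contribution per variable.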

Wrap-up

Recap

- Docker containers are a lightweight, portable, and consistent way to deploy code

- {vetiver} can help you create Docker artifacts for your models

- Model metrics can be stored as metadata in {vetiver} models

- You can add new endpoints to your API to provide additional functionality

Acknowledgments

- Materials derived in part from Intro to MLOps with {vetiver} and licensed under a Creative Commons Attribution 4.0 International (CC BY) License.